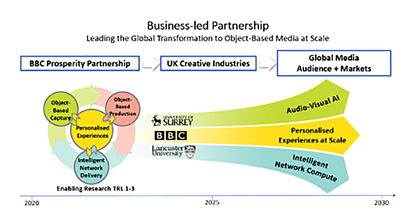

We are delighted to announce the start of a new five-year Prosperity Partnership with the universities of Surrey and Lancaster to develop and trial new ways to create and deliver object-based media at scale. Through groundbreaking collaborative research, we aim to transform how our audiences can enjoy personalised content and services in future. The goal is to enable scaled delivery of a wide range of content experiences, efficiently and sustainably, to mainstream audiences via multiple platforms and devices.

What is a Prosperity Partnership?

Prosperity Partnerships are business-led research partnerships between leading UK-based businesses and their long-term strategic university partners, with match funding, for the benefit of the wider UK economy and society.

Why now?

The UK media sector is in the midst of a transition from broadcast infrastructure and services to IP. In addition to the challenge of maintaining the scale and resilience that audiences expect from broadcast, we also need to meet the expectations that audiences have for internet-delivered content, which they expect to be personalised and customisable to their needs. We’ve already done a lot of work on object-based media which lays the foundation for this, and now need to make it possible to create and distribute this kind of content on a large scale.

This partnership allows us to research and build those capabilities, giving the whole UK an advantage and creating deeper value for users.

What will this partnership tackle, and why?

The partnership will allow us to research the means to create and deliver customised programmes and content using object-based media at a scale not currently feasible.

- It will enable us to make the production process much simpler and lower-cost. Working with the University of Surrey, we will develop AI-based ways to extract media objects (such as individual audio tracks and distinct ‘layers’ from a video scene) and create metadata describing the scene. This will allow the content to be assembled to meet particular user needs (such as accessibility), or tailored to specific device characteristics (like screen size) or to audience interests and context. The current production process carries a significant overhead to achieve this, as it is geared towards creating ‘finished’ programmes rather than the individual components needed to produce flexible content.

- It will also address the challenge of delivering and rendering customised content. Working with Lancaster University, we will develop approaches to intelligently distribute the processing and data through the delivery network, making the best use of resources in the cloud, edge-compute nodes, and the audience’s networks and devices. To render customised content at present, high-end audience devices or large amounts of cloud computing resource are required, making it expensive and impractical to reach large audiences.
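To make the idea of assembling content from objects and metadata more concrete, here is a minimal sketch in Python. It is an illustration only, not our production format: the `MediaObject` and `Viewer` classes, their fields, and the `assemble` function are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaObject:
    """One component of a programme plus metadata describing it (hypothetical schema)."""
    object_id: str
    role: str                  # e.g. "dialogue", "music", "subtitles", "graphics"
    min_screen_width: int = 0  # smallest screen width (pixels) on which it is useful


@dataclass(frozen=True)
class Viewer:
    """What we assume is known about one viewer's needs and device (hypothetical)."""
    screen_width: int
    needs_subtitles: bool = False


def assemble(objects, viewer):
    """Return the ids of the objects to render for this viewer, in programme order."""
    selected = []
    for obj in objects:
        # Accessibility: only include subtitles for viewers who ask for them.
        if obj.role == "subtitles" and not viewer.needs_subtitles:
            continue
        # Device characteristics: skip objects that need a larger screen.
        if obj.min_screen_width > viewer.screen_width:
            continue
        selected.append(obj.object_id)
    return selected


# A toy programme made of four objects (invented data).
PROGRAMME = [
    MediaObject("dialogue", role="dialogue"),
    MediaObject("music", role="music"),
    MediaObject("subtitles", role="subtitles"),
    MediaObject("stats-overlay", role="graphics", min_screen_width=1280),
]

phone_version = assemble(PROGRAMME, Viewer(screen_width=720, needs_subtitles=True))
tv_version = assemble(PROGRAMME, Viewer(screen_width=1920))
```

The same set of objects yields a subtitled version without the large graphics overlay for the phone, and a version with the overlay but no subtitles for the TV. A real system would need far richer metadata and rules than this.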

How will this build on our previous work on object-based media?

So far, our trials have relied on a hand-crafted production process: for example, keeping many audio tracks or multiple camera views and graphics layers throughout production, and manually creating the control metadata that determines how to combine them for a particular application. This works for making individual trials but not for producing content at scale.

- BBC News - Click 1,000: How the pick-your-own-path episode was made

- BBC Taster - Try Click's 1000th Interactive Episode

- BBC R&D - How we Made the Make-Along

- BBC Taster - Watch Instagramification

The distribution and compute for these trial experiences have often relied on the user having a sufficiently powerful device. This could be a high-end smartphone or laptop running the latest browser, capable of decoding multiple video and audio streams at the same time while mixing or switching between them. However, in the future, we will build on our Render Engine Broadcasting work. This has developed ways of creating and delivering personalised object-based experiences to our audience and experimented with cloud-based rendering of experiences.

These have included location-based VR, where we demonstrated the ability to delegate object-based media processing to the cloud (when necessary), meaning more people will be able to use these new media experiences on personal devices that are not as technologically advanced. The challenge is to make the best use of available network and computing resources without incurring significant cloud compute costs or network congestion.
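As a rough illustration of the trade-off described above, the sketch below picks where to render an experience: on the viewer's device, at an edge-compute node, or in the cloud. It is a simplified assumption of how such a decision could work, not a description of our architecture. The `RenderTier` class, the capacity and cost figures, and the `choose_tier` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RenderTier:
    """A place where rendering could happen (hypothetical model)."""
    name: str              # "device", "edge" or "cloud"
    spare_capacity: float  # compute currently available, in arbitrary units
    cost_per_unit: float   # relative cost to the provider of using this tier


def choose_tier(tiers, required_units):
    """Return the name of the cheapest tier able to render the experience, or None."""
    capable = [t for t in tiers if t.spare_capacity >= required_units]
    if not capable:
        return None
    return min(capable, key=lambda t: t.cost_per_unit).name


# Invented figures for one viewer with a modest device.
TIERS = [
    RenderTier("device", spare_capacity=2.0, cost_per_unit=0.0),
    RenderTier("edge", spare_capacity=10.0, cost_per_unit=1.0),
    RenderTier("cloud", spare_capacity=1000.0, cost_per_unit=5.0),
]

simple_experience = choose_tier(TIERS, required_units=1.5)
complex_experience = choose_tier(TIERS, required_units=4.0)
```

With these figures, the simple experience is rendered on the device, while the more demanding one is delegated to the edge node and only falls back to the cloud if the edge cannot cope. A real system would also have to account for network congestion and latency, which this sketch ignores.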

How will the Prosperity Partnership help take this further?

The partnership will let us address these two key challenges in making object-based media work at scale:

- Enabling real-time extraction of audio-visual objects using AI, without having to capture content specifically to support object-based media, which increases costs and can be impractical for many kinds of programmes.

- Enabling a distributed intelligent network compute architecture that will allow people to get an object-based experience without needing the latest consumer equipment and without needing massive cloud compute infrastructure.

The BBC is ideally placed to lead this transition, thanks to its access to audiences, world-renowned programme archives and data for testing, and its track record for impartiality, bringing the industry together, and leading standards development.

We co-created this partnership with two world-leading academic groups with whom we already have a strong track record of successful collaboration:

- The audio-visual AI expertise of the University of Surrey, with whom we have been collaborating for over 20 years, for example as part of our audio research partnership. We won an award for an object-based audio experience.

- The software-defined network distributed compute expertise of Lancaster University. The university previously collaborated with the BBC in an EU project on the internet delivery of media.

In addition to working with these universities, a network of 16 companies (ranging from SMEs to large multinationals) have agreed to work with the partnership through methods such as sponsoring PhD students, helping to evaluate prototypes, and providing access to facilities. We’re looking forward to starting this exciting programme of work to deliver the next generation of experiences to our audiences.

We are hiring! We are currently recruiting postdoctoral researchers and research software engineers to join this partnership at the very start.

Watch this space for further developments!

- BBC R&D - Partnerships

- BBC R&D - Object-Based iPlayer - Our Remote Experience Streaming Tests

- BBC R&D - StoryFormer: Building the Next Generation of Storytelling

- BBC R&D - 5G Smart Tourism Trial at the Roman Baths

- BBC R&D - New Audience Experiences for Mobile Devices

- BBC R&D - Object-Based Media Toolkit

- BBC R&D - 5G Trials - Streaming AR and VR Experiences on Mobile

- BBC R&D - Where Next For Interactive Stories?

- BBC R&D - Storytelling of the Future