BBC Research & Development has been investigating how artificial intelligence (AI) technologies such as computer vision and machine learning can be applied to assist in the production of natural history programming. During this year's Springwatch, we collaborated with the BBC Natural History Unit (NHU) to test the use of the systems we've developed to help monitor some of the remote wildlife cameras. Our system monitored the cameras for activity, logging when it happened and making a recording.

One of the unique challenges of natural history programming compared to other genres of television is the lack of control over the subject matter. Unlike drama or documentary featuring humans, the animals generally can't be asked to perform on demand, and their behaviour can't always be predicted. As such, the time spent preparing or waiting for a shot is very high compared to the amount of useful recording. The 'shooting ratio' of natural history, i.e. the ratio of the total footage recorded to the length of the final programme, is among the highest in all television production.

Camera traps

There are opportunities for technology to assist with these issues in the production process. One of the common approaches is to make use of 'camera traps' - cameras left out in the wild that are automatically triggered by the presence of an animal. There are commercial examples available, often known as trail cams, which are popular with ecological researchers and hunters. Broadcasters make use of these trail cams as well as more bespoke systems with more sophisticated cameras. These can be left out for months at a time and autonomously collect footage. The trigger for recording is usually an electronic component such as an infrared sensor, similar to the type used in burglar alarms. These sensors are designed to use as little power as possible, because one of the key factors limiting how long the traps can stay out in the wild is how long their batteries last. However, their triggering is very simplistic, so they are often set off erroneously by other changes in the scene, such as plants moving in the wind or the sun coming out.

At BBC R&D, we have previously investigated how to make these camera traps more intelligent. One project involved building a unit with a camera attached to a small computer, which constantly monitored the video from the camera to determine when animals had appeared in shot. This allowed us to use computer vision techniques to filter out unwanted triggers such as moving plants, and to combine the data from additional sensors such as an IR camera. However, battery life becomes an increasingly significant limitation with these more sophisticated techniques, since continuously analysing video consumes far more power than a simple sensor. The took a very similar approach when building a camera trap as part of their , and they offer detailed instructions for those interested in building their own camera setup.

- BBC R&D - Intelligent Video Production Tools

- BBC Winterwatch - Where birdwatching and artificial intelligence collide

- BBC World Service - Digital Planet: Springwatch machine learning systems

Springwatch

One of the principal features of the 'Watches' (Springwatch, Autumnwatch and Winterwatch) is their wildlife cameras. Specialist teams place a series of cameras out in the wild to capture animals' behaviour in their natural environment. Springwatch, lasting three weeks, is the largest production and could have up to 30 cameras over a series, many giving rarely seen views such as the insides of birds' nests. Without the power restrictions of the camera traps, these cameras can produce video round the clock. A team of zoologists monitor the feeds, keeping a record of the activity and extracting recordings of moments of interest. Viewers can then follow the story of the featured animals through regular updates in the programme and through live streaming over the internet throughout the run.

For this year's Springwatch, the BBC's cameras were joined by a collection of live wildlife cameras from partners around the UK, and those cameras were offered as an additional public stream. With no extra staff or facilities available to closely monitor and record the partner cameras, we have been investigating how we could apply the technology we've been developing to perform these tasks automatically. By doing this, we were able to extract clips that the Springwatch Digital team used in their live shows.

How it works

Developing our work with intelligent camera traps further, we have been investigating how machine learning (ML) can be applied to monitoring video to extract useful information.

When presented with a video, there are various computer vision techniques for determining whether there are moving objects in the scene, which might indicate activity a viewer would be interested in seeing. A common technique is background segmentation: by learning what the background looks like, we can detect differences in the image and so notice when elements of the scene are moving. The 'background' can be a probabilistic model, such as a Gaussian mixture model, rather than just a 2D image. This allows it to store the likely variation in the background's appearance, so the model can represent the fact that, for example, an area of the image with leaves moving in the wind varies in appearance. However, a significant change in appearance, such as an animal walking into the picture, falls outside the acceptable variation of the background, and we can detect this as a moving object. We applied techniques such as these to our intelligent camera traps.
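As a rough illustration of the idea, the sketch below keeps one running Gaussian (a mean and a variance) per pixel, a single-component simplification of the Gaussian mixture model described above. A pixel is flagged as foreground when it lies more than `k` standard deviations from its learned mean, so pixels that normally flicker earn a wider tolerance. The class name, the learning rate `alpha`, the threshold `k` and the toy scene are hypothetical choices for this example, not the parameters of our system; for a full per-pixel mixture model, OpenCV provides `cv2.createBackgroundSubtractorMOG2`.

```python
from typing import List

Frame = List[List[float]]  # greyscale image: rows of pixel intensities


class RunningGaussianBackground:
    """Hypothetical per-pixel background model with one Gaussian per pixel.

    A single-component simplification of a Gaussian mixture model: each pixel
    learns how much its appearance normally varies, so flickering pixels
    (e.g. leaves in the wind) get a wider tolerance than static ones.
    """

    def __init__(self, first_frame: Frame, alpha: float = 0.05, k: float = 2.5,
                 init_var: float = 25.0, min_var: float = 4.0) -> None:
        self.alpha = alpha      # learning rate of the running estimates
        self.k = k              # foreground threshold, in standard deviations
        self.min_var = min_var  # variance floor so static pixels aren't hypersensitive
        self.mean = [[float(p) for p in row] for row in first_frame]
        self.var = [[init_var] * len(row) for row in first_frame]

    def apply(self, frame: Frame) -> List[List[bool]]:
        """Return a foreground mask for `frame`, then update the model with it."""
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, value in enumerate(row):
                mu, var = self.mean[y][x], self.var[y][x]
                d = value - mu
                # Foreground if the pixel is more than k standard deviations from the mean.
                mask_row.append(d * d > self.k * self.k * var)
                # Exponential moving averages of mean and variance. Every pixel is
                # updated, so an animal that stays still is slowly absorbed.
                self.mean[y][x] = mu + self.alpha * d
                self.var[y][x] = max(self.min_var,
                                     (1 - self.alpha) * var + self.alpha * d * d)
            mask.append(mask_row)
        return mask


# Toy 3x3 scene: column 0 is foliage flickering between 90 and 110,
# the rest is static at 100.
def scene(t: int) -> Frame:
    leaf = 90.0 if t % 2 == 0 else 110.0
    return [[leaf, 100.0, 100.0] for _ in range(3)]


model = RunningGaussianBackground(scene(0))
for t in range(1, 200):
    model.apply(scene(t))      # learn the background, including the flicker

test_frame = scene(200)
test_frame[1][1] = 200.0       # an "animal" appears in the centre pixel
mask = model.apply(test_frame)
print(mask[1][1], mask[1][0], mask[0][2])  # True False False
```

The flickering foliage column learns a variance of roughly 100 (a standard deviation of about 10), so its usual swings stay below the threshold, while the static pixels settle at the variance floor and react to the much larger change caused by the "animal".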

Knowing there is movement in a scene, unfortunately, doesn't give much information about what is happening. If the camera moved, or a significant change in the weather caused plants or other objects to move, then our monitoring system would trigger. But even with this trigger, we wouldn't actually know if there were animals present. This is where machine learning starts to help.

The first tool we apply to the video is an object recognition system designed to detect and locate animals in the scene; you can see examples of it in action in the images below. The tool we've built is based on the that runs on the Darknet open-source machine learning framework. We've trained a network to recognise both birds and mammals, and it runs just fast enough to find and then track animals in real time on live video. The data generated by this tool means we can be more confident that an animal, rather than some other moving object, is present in the scene, and by tracking it we can know how long a particular animal is visible. Using this machine learning approach, we can move from knowing 'something has happened in the scene' to 'an animal has done something in the scene'.

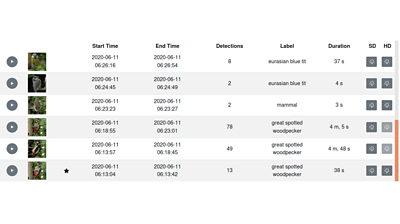

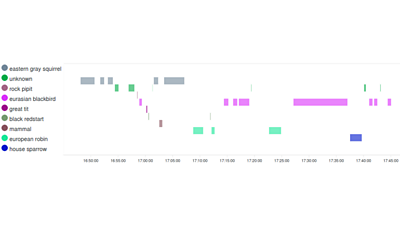
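The tracking step described above isn't spelled out in detail, so the sketch below assumes one common and simple approach: a greedy tracker that links each new bounding box to the existing track whose previous box overlaps it most, measured by intersection over union (IoU). A track's duration then gives how long that animal was visible. `IouTracker`, `iou_threshold` and `max_gap` are hypothetical names and values, and the hard-coded boxes stand in for the per-frame output of a detector such as the Darknet-based one.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    first_seen: float  # seconds
    last_seen: float

    @property
    def duration(self) -> float:
        return self.last_seen - self.first_seen


class IouTracker:
    def __init__(self, iou_threshold: float = 0.3, max_gap: float = 1.0) -> None:
        self.iou_threshold = iou_threshold  # minimum overlap to continue a track
        self.max_gap = max_gap              # seconds a track may go unseen before closing
        self.active: List[Track] = []
        self.finished: List[Track] = []
        self._next_id = 0

    def update(self, detections: Sequence[Box], t: float) -> None:
        """Feed the detector's boxes for the frame at time t (seconds)."""
        # Close tracks that have not been seen for too long.
        self.finished += [tr for tr in self.active if t - tr.last_seen > self.max_gap]
        self.active = [tr for tr in self.active if t - tr.last_seen <= self.max_gap]

        unmatched = list(detections)
        for tr in self.active:
            if not unmatched:
                break
            # Greedily take the detection that overlaps this track's last box most.
            best = max(unmatched, key=lambda b: iou(tr.box, b))
            if iou(tr.box, best) >= self.iou_threshold:
                tr.box, tr.last_seen = best, t
                unmatched.remove(best)
        # Any detection left over starts a new track.
        for box in unmatched:
            self.active.append(Track(self._next_id, box, t, t))
            self._next_id += 1

    def close_all(self) -> List[Track]:
        self.finished += self.active
        self.active = []
        return sorted(self.finished, key=lambda tr: tr.track_id)


tracker = IouTracker()
frames = [
    (0.0, [(10, 10, 30, 30)]),
    (1.0, [(12, 10, 32, 30)]),
    (2.0, [(14, 11, 34, 31), (80, 80, 95, 95)]),  # a second animal flits through
    (3.0, [(16, 12, 36, 32)]),
    (4.0, [(18, 12, 38, 32)]),
]
for t, boxes in frames:
    tracker.update(boxes, t)
for tr in tracker.close_all():
    print(tr.track_id, tr.duration)  # prints "0 4.0" then "1 0.0"
```

Greedy IoU matching is crude (it can swap identities when animals cross), but it is cheap, which matters when detection already uses most of the real-time budget.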

Combining the motion and animal detection tools allows us to detect 'events' in the video where animals enter or move around the scene. We store data on the timing and content of each event and use it as the basis for a timeline that we provide to members of the production team, who can use it to navigate through the activity on a particular camera's video. We also provide two clips of each event: a small preview for quick review of the content, and a second at the original quality with a few seconds of extra video either side of the activity. The full-quality clip can be downloaded immediately to be viewed, shared and imported into an editing package.
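One way to turn per-frame detections into events and clip boundaries is sketched below. It assumes, hypothetically, that each sampled frame carries two flags (motion detected, animal detected). Frames where both are true are merged into one event when they are no more than `max_gap` seconds apart, and the full-quality clip is padded by `pad` seconds either side. The three-second padding and the other thresholds are illustrative values, not the ones used on Springwatch.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Event:
    start: float  # seconds from the start of the recording
    end: float


def detect_events(samples: Sequence[Tuple[float, bool, bool]],
                  max_gap: float = 2.0, min_duration: float = 0.5) -> List[Event]:
    """samples: (timestamp, motion_detected, animal_detected) in time order."""
    events: List[Event] = []
    current: Optional[Event] = None
    for t, motion, animal in samples:
        if not (motion and animal):
            continue  # e.g. wind-blown plants: motion but no animal
        if current is not None and t - current.end <= max_gap:
            current.end = t  # continue the current event
        else:
            if current is not None and current.end - current.start >= min_duration:
                events.append(current)
            current = Event(t, t)  # start a new event
    if current is not None and current.end - current.start >= min_duration:
        events.append(current)
    return events


def clip_bounds(event: Event, pad: float = 3.0,
                video_length: Optional[float] = None) -> Tuple[float, float]:
    """Extend an event by `pad` seconds either side, clamped to the recording."""
    start = max(0.0, event.start - pad)
    end = event.end + pad
    if video_length is not None:
        end = min(end, video_length)
    return start, end


motion = {3, 4, 5, 7, 12, 20, 21}   # t=12: plants moving in the wind
animal = {3, 4, 5, 7, 20, 21}
samples = [(float(t), t in motion, t in animal) for t in range(24)]
events = detect_events(samples)
print(events)  # [Event(start=3.0, end=7.0), Event(start=20.0, end=21.0)]
print([clip_bounds(e, video_length=23.0) for e in events])  # [(0.0, 10.0), (17.0, 23.0)]
```

The motion at t=12 without an animal detection produces no event, which is the benefit of combining the two tools rather than relying on motion alone.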

To make this timeline as useful and descriptive as possible, we also extract images from the video to represent the animals that have been detected in the event, and present these to the user. The images are also passed on to a second machine learning system, which attempts to classify the animal's species. The image classification system uses a convolutional neural network (CNN), specifically the . If the system is confident of an animal's species, it labels the photograph and also adds the label to the user interface. This extra layer of information is intended to make it easier for a user to find the videos they're looking for, particularly if one camera could have picked up several different species. We now know 'a particular species of animal has done something in the scene'.
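The "only label when confident" rule can be illustrated with a small sketch. It assumes, hypothetically, that the classifier outputs one raw score (logit) per species, converts these to probabilities with a softmax, and attaches a label only when the top probability clears a threshold. The species list, the scores and the 0.8 threshold are made up for the example; the source does not say how confidence is measured.

```python
import math
from typing import List, Optional, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def label_if_confident(logits: Sequence[float], species: Sequence[str],
                       threshold: float = 0.8) -> Optional[Tuple[str, float]]:
    """Return (species, probability) for the top class, or None if not confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] >= threshold:
        return species[best], probs[best]
    return None  # leave the image unlabelled rather than guess


species = ["blue tit", "great tit", "robin"]
result = label_if_confident([4.0, 1.0, 0.5], species)
print(result[0], round(result[1], 2))                 # blue tit 0.93
print(label_if_confident([1.0, 0.9, 0.8], species))   # None
```

Raising the threshold trades coverage for precision: fewer images get a label, but the labels shown in the interface are less likely to be wrong.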

One challenge in recognising the species of animals is doing so when the animal won't pose nicely for the camera. Machine learning systems are only as good as the training material they're given, which in this case is many hundreds of animal photographs. It's natural for photographers to only take and retain good photos where the animal is framed well and clearly visible, but in our scenario the animals are free to wander in and out of shot. This means the system has to classify many different views of the animals, and it clearly performs better when an animal poses as if for a portrait than when it shows its rear to the camera! To help with this problem, we can use many hours of footage from previous editions of the Watches as a source of training images, animal rears included.

As well as providing a tool for navigating through media, the system can be used as a data collection tool to give insight into the behaviour of animals in the scene, logging their time spent and the number of visits. We can then visualise this data on a graph.
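Logging time spent and the number of visits amounts to simple aggregation over the detected events. The sketch below assumes each event has already been reduced to a hypothetical (species, start, end) record in seconds. It counts visits and total time per species, plus visits per hour of recording, which is the kind of table a graph could be drawn from. The record format and the function name are illustrative, not part of the system described here.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def summarise_visits(events: Iterable[Tuple[str, float, float]]):
    """events: (species, start_s, end_s) records, one per detected visit."""
    visits: Counter = Counter()                        # number of visits per species
    time_spent: Dict[str, float] = defaultdict(float)  # total seconds on camera per species
    per_hour: Counter = Counter()                      # visits per (species, hour of recording)
    for species, start, end in events:
        visits[species] += 1
        time_spent[species] += end - start
        per_hour[(species, int(start // 3600))] += 1
    return visits, dict(time_spent), per_hour


log = [("blue tit", 60.0, 90.0), ("robin", 3700.0, 3710.0), ("blue tit", 3900.0, 3945.0)]
visits, time_spent, per_hour = summarise_visits(log)
print(visits["blue tit"], time_spent["blue tit"])  # 2 75.0
print(per_hour[("blue tit", 1)])                    # 1
```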

The clips extracted by our system from the partner cameras were used on the live shows put out by the Springwatch Digital team via the BBC website and .

What next

The situation created by the coronavirus pandemic forced major changes to how productions operate. We had to very quickly change our system from one based on-site in the outside broadcast truck to one that operated remotely in the cloud. But this has created opportunities to apply our system to media from many different sources. This flexibility is something we want to pursue, so that the system can remain available as an easy-to-use service that NHU productions can call on when they need it.

We also aim to make the system more intelligent, so it can provide more useful information to the production team about the clips it makes. We've begun some early investigations into techniques that can recognise or classify the behaviour or 'interest' of video clips. We hope to be able to make these improvements and test the system again by the time of Autumnwatch later in the year.

- BBC R&D - The Autumnwatch TV Companion experiment

- BBC R&D - Designing for second screens: The Autumnwatch Companion

- BBC Springwatch

- BBC R&D - Interpreting Convolutional Neural Networks for Video Coding

- BBC R&D - Video Compression Using Neural Networks: Improving Intra-Prediction

- BBC R&D - Faster Video Compression Using Machine Learning

- BBC R&D - AI & Auto Colourisation - Black & White to Colour with Machine Learning

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.